AI Search optimization for brand safety: How to control what AI says about you

Language models are becoming a new source of knowledge and brand recommendations. The problem is that their answers can be incomplete, outdated, or entirely incorrect, and users rarely verify their reliability.

That is why message safety in LLMs has become a key challenge today: visibility matters, but controlling reputational risk and ensuring that AI reflects the brand’s message in a way that is consistent with the facts matters even more. So how can you control what AI says about your brand?

Why is it even worth controlling what LLMs say about you?

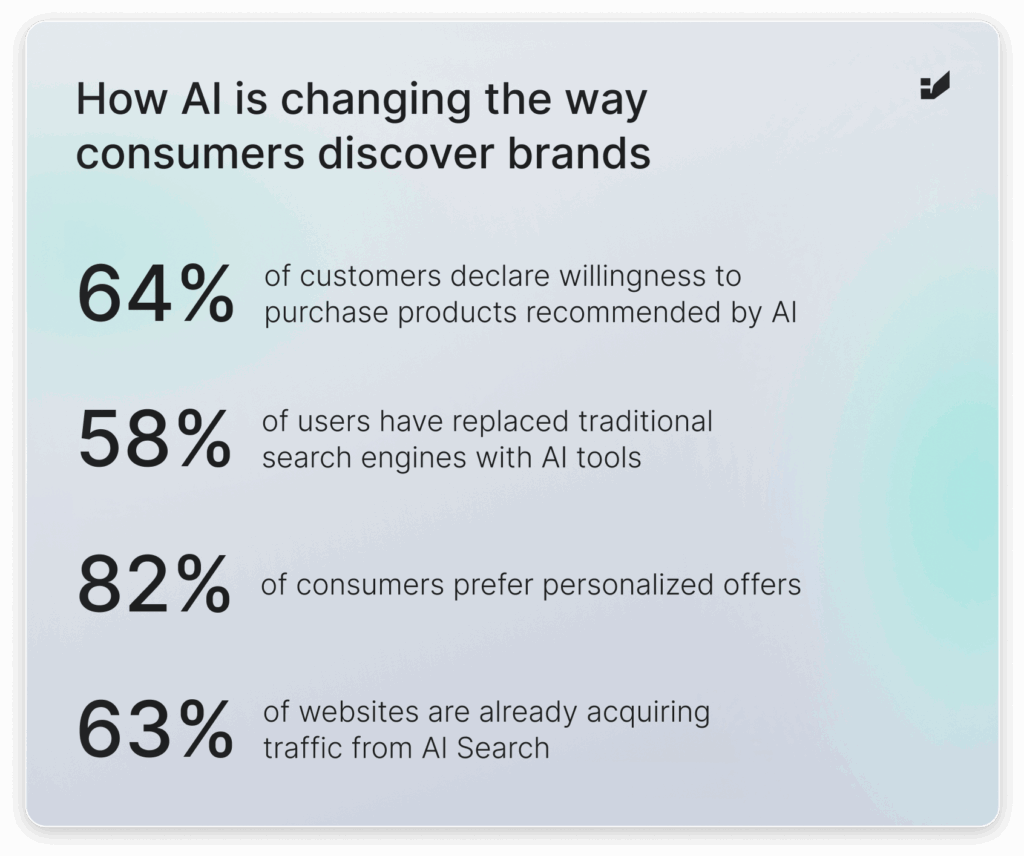

People interact with information differently now. This has already happened. Market data clearly shows that AI is becoming the main filter through which consumers discover brands.

- 64% of customers declare a willingness to purchase products recommended by AI. This means that every mention of a brand in a language model’s response carries sales potential comparable to a direct expert recommendation.

- 58% of users have replaced traditional search engines with AI tools when searching for brands and services. In practice, for more than half of consumers, the LLM becomes the first point of contact with the market.

- 82% of consumers prefer personalized offers, and generative systems can deliver them in ways unavailable to traditional search mechanisms.

- 63% of websites are already acquiring traffic from AI Search, proving that this is not a marginal trend but a lasting transformation of the online visibility ecosystem.

The conclusions are clear:

- If your brand does not appear in AI answers, for many users, it simply does not exist.

- If, on the other hand, it appears but in an outdated or imprecise way, you risk losing reputation and credibility.

Controlling the narrative in AI has become a strategic necessity in building brand safety.

How do generative chats obtain information about brands?

To effectively manage how language models describe your brand, one must understand how they acquire information.

Data sources

- Training datasets: LLMs are built on billions of texts from books, articles, blogs, forums, reviews, technical documentation, and multimedia content. This forms the foundation of their “general knowledge.”

- Real-time data: platforms such as ChatGPT, Perplexity, or Gemini enrich their answers with current sources like forums (Reddit, Quora), reviews (G2, Capterra, Trustpilot), industry blogs, and even YouTube video transcripts.

- Contextual recommendations: ChatGPT in browsing mode or Microsoft Copilot cite websites directly, provided they are considered credible.

Processing mechanism

LLMs do not simply match keywords. Their goal is to understand the user’s intent and generate an answer that sounds natural and covers the topic comprehensively. The process includes:

- Semantic analysis of the query, recognition of entities (e.g., brand names, products).

- Synthesizing information from multiple sources, selecting content that best fits the context.

- Generating a coherent answer, often with elements of recommendations, comparisons, or purchase suggestions.

Vulnerability to errors

This process is not free of risk. Since models rely on vast datasets and do not always verify facts in real time, the following occur:

- hallucinations – fabricated information,

- outdated data – e.g., obsolete product descriptions,

- contextual distortions – confusing similar brands or services.

Case study

Such a situation occurred in one of our projects. A client in the mobile telephony industry, characterized by consistent brand communication and a distinctive Tone of Voice, was generally described positively in LLMs with one exception. One chatbot, when asked for an opinion about the brand, often added the false claim that it did not have a customer hotline. This message appeared even though the client’s website clearly displayed hotline information.

It turned out to be the consequence of a single external publication that provided this incorrect information. Although the brand’s website and the entire surrounding ecosystem confirmed the hotline’s existence, the LLM drew knowledge from one flawed source, thus misleading users.

For this reason, controlling which sources about a brand exist online and in what form becomes critical.

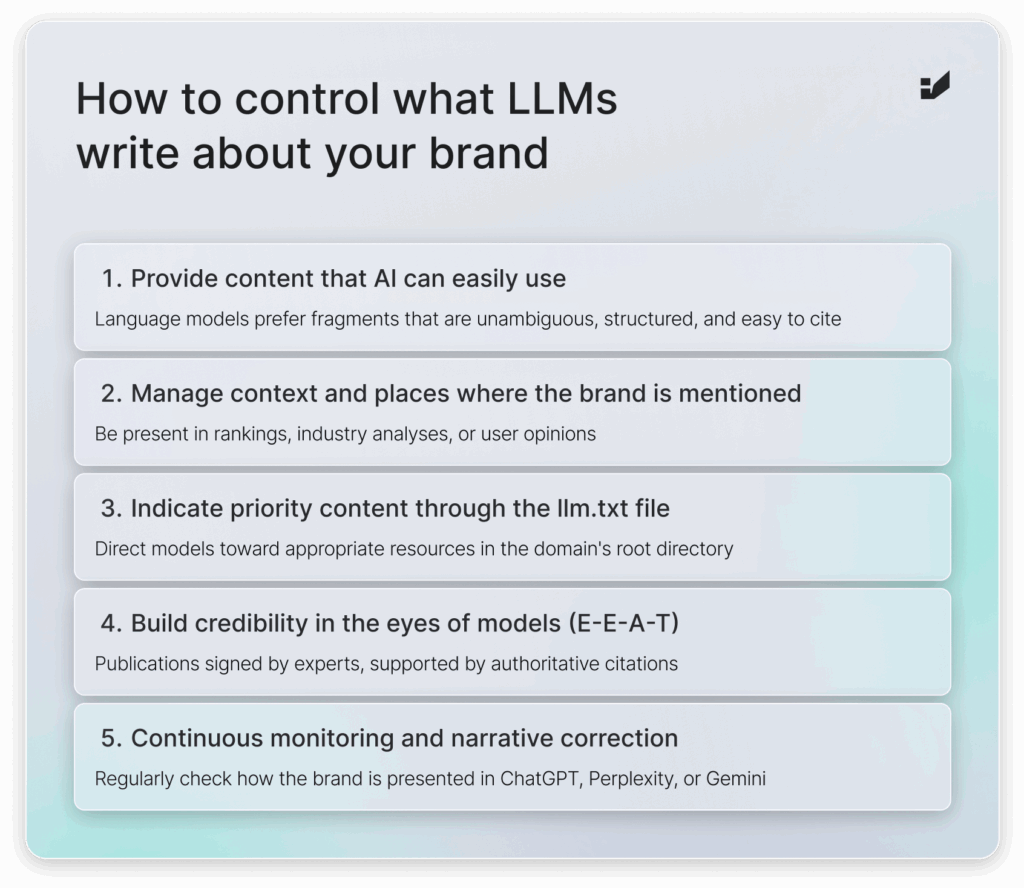

How to control what LLMs write about your brand

To increase control over brand narrative in AI, a systemic approach is needed, combining content, technical, and reputational aspects.

Providing content that AI can easily use

Language models do not pick random sentences. They prefer those fragments of text that are unambiguous, structured, and easy to cite. Therefore, if a brand publishes explanations in a clear structure, answers specific questions, and defines key concepts, it increases the chance that these sources will be used in responses. In practice, clarity of form directly translates into whether brand information will be presented correctly.

Managing context and places where the brand is mentioned

What models say also depends on where they obtain data. AI relies on articles, forums, reviews, and reports. If a brand is present in rankings, industry analyses, or user opinions, its narrative is more likely to be reinforced in answers. The absence of such mentions means models may rely on random, less reliable sources, increasing the risk of distortions.

Indicating priority content through the llm.txt file

Mechanisms that allow website owners to direct models toward appropriate resources are playing an increasing role. The llm.txt file, placed in the domain’s root directory, functions as a signpost: it informs which materials best describe the brand and should be cited first. This helps reduce the risk of models relying on outdated or random sources.

Building credibility in the eyes of models (E-E-A-T)

Models evaluate both the content and its source. They check whether the text is authored by someone with relevant expertise, whether it references research and data, and whether the website has a strong reputation. Publications signed by experts, supported by authoritative citations, and marked with structured data (e.g., Article, Person, Organization) are signals to AI that the content can be trusted. This directly increases the chance that the brand’s narrative will be included in responses.

Continuous monitoring and narrative correction

Even with the best strategy, models can generate errors. Therefore, control also means regularly checking how the brand is presented in ChatGPT, Perplexity, or Gemini. If inaccuracies are detected, one must respond by creating updated content, publishing corrections, or expanding FAQ sections. In this way, models are provided with correct information, which over time displaces erroneous narratives.

Next Steps for Brand Protection in AI

The year 2025 marks a new reality in digital marketing. AI Search Optimization has become necessary due to the growing role of generative language models in consumer decisions.

Brands that ignore this area risk losing visibility and reputation. Those who act will gain a competitive advantage, becoming the natural choice recommended by AI systems.

AI is now recommending brands, not just searching for them. How soon will you take charge of your AI story before your competitors?